Most, if not all, of this is copied and pasted (italicized) from the Scaled Agile Framework website. I may put it in my own words from time to time.

© Scaled Agile, Inc.

Reference https://www.scaledagileframework.com/iteration-execution/

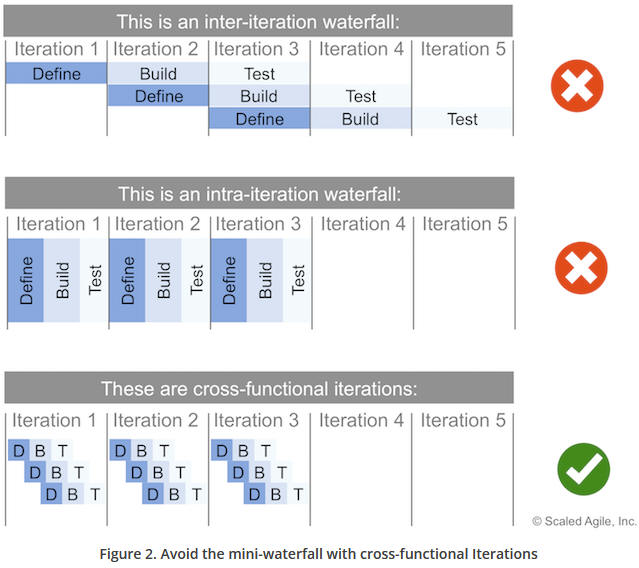

Avoiding the Intra-iteration Waterfall

Teams should avoid the tendency to waterfall the iteration and instead ensure that they are completing multiple define-build-test cycles in the course of the iteration, as Figure 2 illustrates.

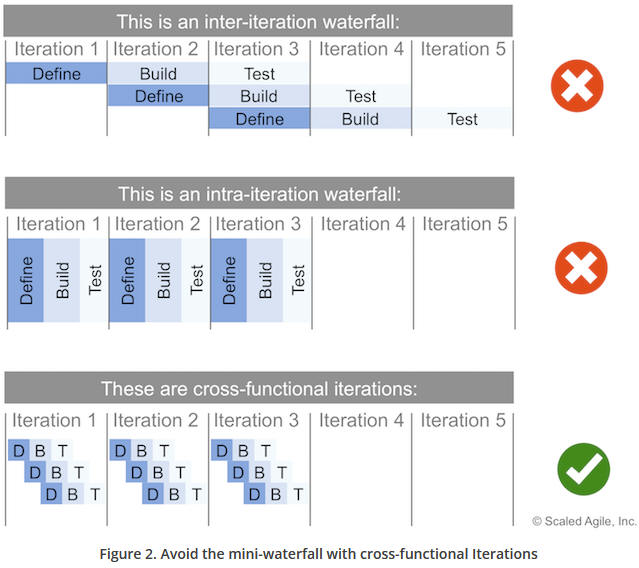

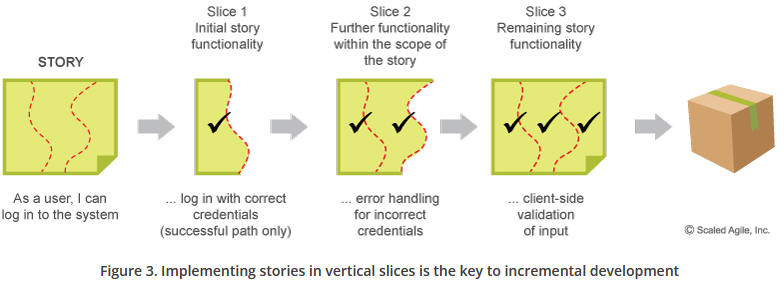

Figure 3 illustrates how implementing stories in thin, vertical slices is the foundation for incremental development, integration, and testing.

Building stories this way enables a short feedback cycle and allows Agile teams to operate with a smaller increment of the working system, supporting continuous integration and testing. It allows team members to refine their understanding of the functionality, and it facilitates pairing and more frequent integration of working systems. The dependencies within and across teams and even trains can be managed more effectively, as the dependent teams can consume the new functionality sooner. Incrementally implementing stories helps reduce uncertainty and risk, validates architectural and design decisions, and promotes early learning and knowledge sharing.

Reference: https://www.scaledagileframework.com/team-backlog/

The Team Backlog contains user and enabler Stories that originate from the Program Backlog, as well as stories that arise from the team’s local context. It may include other work items as well, representing all the things a team needs to do to advance their portion of the system.

The Product Owner (PO) is responsible for the team backlog. Since it includes both user Stories and enablers, it’s essential to allocate capacity in a way that balances investments across conflicting needs. This capacity allocation takes into account both the needs of the team and the Agile Release Train (ART).

The team backlog must always contain some stories that are ready for implementation without significant risk or surprise. Agile teams take a flow-based approach to maintain this level of backlog readiness, typically by having at least one team backlog refinement event per iteration (or even one per week). Backlog refinement looks at upcoming stories (and features, as appropriate) to discuss, estimate, and establish an initial understanding of acceptance criteria. Teams may apply Behavior-Driven Development, using specific examples to help clarify stories.

The backlog refinement process is continuous and should not be limited to a single event timebox. Teams may revisit a story multiple times before finalizing and committing to it in Iteration Planning. Also, as multiple teams are doing backlog refinement, new issues, dependencies, and stories are likely to result. In this way, backlog refinement helps surface problems with the current plan, which will come under discussion in ART sync events.

Reference: https://www.scaledagileframework.com/iteration-planning/

Iteration Planning is an event where all team members determine how much of the Team Backlog they can commit to delivering during an upcoming Iteration. The team summarizes the work as a set of committed Iteration Goals.

Teams plan by selecting Stories from the Team backlog and committing to execute a set of them in the upcoming Iteration. The team’s backlog has been seeded and partially planned during Program Increment (PI) Planning. In addition, the teams have feedback—not only from their prior iterations but also from the System Demo and other teams they are working with. That, and the natural course of changing fact patterns, provides the broader context for iteration planning. The output of iteration planning is:

The iteration backlog, consisting of the stories committed to for the iteration, with clearly defined acceptance criteria

A statement of Iteration goals, typically a sentence or two for each one, stating the business objectives of the iteration

A commitment by the team to the work needed to achieve the goals

Prior to iteration planning, the Product Owner (PO) will have prepared some preliminary iteration goals, based on the team’s progress in the Program Increment (PI) so far. Typically, the Product Owner starts the event by reviewing the proposed iteration goals and the higher-priority stories in the team backlog. During iteration planning, the Agile team discusses implementation options, technical issues, Nonfunctional Requirements (NFRs), and dependencies, then plans the iteration. The Product Owner defines the ‘what’; the team defines ‘how’ and ‘how much’.

Throughout iteration planning, the team elaborates the acceptance criteria for each story and estimates the effort to complete each one. Based on their available capacity for the iteration, the team then selects the candidate stories. Some teams break each story down into tasks and forecast them in hours to confirm that they have the capacity and skills to complete them. Once this process is complete, the team commits to the work and records the iteration backlog in a visible place, such as a storyboard, Kanban board or Agile project management tooling. Planning is timeboxed to a maximum of four hours for a two-week iteration.

First, the team quantifies their capacity to perform work in the upcoming iteration. Each team member determines their availability, acknowledging time off and other potential duties. This activity also takes into account other standing commitments, such as maintenance, that are distinct from new story development (see the section about capacity allocation in the Team Backlog article). Using their historical velocity as a starting point, the team makes adjustments based on the unavailable time for each team member to determine the actual capacity for the iteration.
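Below is a minimal sketch of the capacity step. It assumes capacity scales with the share of person-days the team actually has available in a ten-workday iteration. The proportional rule, the `Member` type, and the `iteration_capacity` function are illustrative assumptions and are not defined by SAFe.

```python
from dataclasses import dataclass


@dataclass
class Member:
    """A team member and the days they cannot work on iteration goals."""
    name: str
    days_unavailable: int  # time off, training, or other standing duties


def iteration_capacity(historical_velocity: int,
                       members: list[Member],
                       workdays: int = 10) -> int:
    """Scale historical velocity by the share of person-days available.

    Assumption: velocity is produced evenly across members and days.
    """
    total_days = workdays * len(members)
    # Cap each absence at the iteration length so nobody goes negative.
    unavailable = sum(min(m.days_unavailable, workdays) for m in members)
    available_days = total_days - unavailable
    # Integer division rounds down, so the team never commits above capacity.
    return historical_velocity * available_days // total_days


team = [Member("dev1", 0), Member("dev2", 2), Member("dev3", 0),
        Member("test1", 0), Member("test2", 0)]
print(iteration_capacity(40, team))  # 38
```

In this example, losing 2 of 50 person-days lowers a 40-point velocity to 38.4. The sketch rounds that down to 38 so the commitment stays on the safe side.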

Once team capacity has been established, the team backlog is reviewed. Each story is discussed, covering relative difficulty, size, complexity, uncertainty, technical challenges, and acceptance criteria. Teams often use Behavior-Driven Development (BDD) to ensure a shared understanding of each story’s specific behavior. Finally, the team agrees to a size estimate for the story. There are typically other types of stories on the team backlog as well, including Enablers that could constitute infrastructure work, research Spikes, and architectural improvements, as well as refactoring work and defects. These items are also prioritized and estimated.

Some teams break each story into tasks. As the tasks are identified, team members discuss each one: who would be the best person(s) to accomplish it, approximately how long it will take (typically in hours), and any dependencies it may have on other tasks or stories. Once all this is understood, a team member takes responsibility for a specific task or tasks. As team members commit to tasks, they reduce their individual iteration capacity until it reaches zero. Often, toward the end of the session, some team members will find themselves overcommitted, while others will have some of their capacity still available. This situation leads to a further discussion among team members to evenly distribute the work.
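The task-level bookkeeping can be sketched as follows. Member names, hour figures, and the `remaining_capacity` helper are hypothetical. The sketch only shows how each claimed task reduces its owner's capacity, and how overcommitted and underused members then become visible.

```python
def remaining_capacity(capacity_hours: dict[str, float],
                       tasks: list[tuple[str, str, float]]) -> dict[str, float]:
    """Subtract each claimed task's hour estimate from its owner's capacity.

    tasks holds (task description, owner, estimated hours) tuples.
    A negative result means the owner is overcommitted.
    """
    remaining = dict(capacity_hours)
    for _description, owner, hours in tasks:
        remaining[owner] -= hours
    return remaining


capacity = {"Ana": 60, "Ben": 50, "Chen": 55}
tasks = [
    ("Build login form", "Ana", 24),
    ("Write login API", "Ana", 40),
    ("Test login flow", "Ben", 20),
    ("Update password policy", "Chen", 16),
]
left = remaining_capacity(capacity, tasks)
over = [name for name, hours in left.items() if hours < 0]
spare = [name for name, hours in left.items() if hours > 0]
print(left)         # {'Ana': -4, 'Ben': 30, 'Chen': 39}
print(over, spare)  # ['Ana'] ['Ben', 'Chen']
```

Ana's negative balance is the cue for the redistribution discussion. For example, Ben or Chen could pick up part of the login API work.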

While breaking stories into tasks is fairly common, it is optional and not mandated in SAFe. It is mostly used by teams to better understand their capacity and capabilities, and over time may become unnecessary, with the team planning only at the story level.

Once the iteration backlog is understood, the team turns their attention to synthesizing one or more iteration goals that summarize the work the team aims to accomplish in that iteration. They are based on the iteration backlog as well as the team and program PI objectives from the PI planning event. The closer this iteration is to the PI planning session, the more likely the PI objectives will remain unchanged.

When the team’s capacity has been reached, in terms of committed stories, no more stories are pulled from the team backlog. At this point, the Product Owner and team agree on the final list of stories that will be selected, and they revisit and restate the iteration goals. The entire team then commits to the iteration goals, and the scope of the work remains fixed for the duration of the iteration.
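Here is a minimal sketch of pulling ranked stories until capacity is reached. It assumes the backlog is already in the Product Owner's rank order and that pulling stops at the first story that no longer fits. In practice, teams may negotiate a smaller story further down the list instead, which this sketch does not model. Story names and sizes are hypothetical.

```python
def select_stories(ranked_backlog: list[tuple[str, int]], capacity: int) -> list[str]:
    """Pull stories in rank order until the next one would exceed capacity."""
    selected, committed = [], 0
    for story, points in ranked_backlog:
        if committed + points > capacity:
            break  # capacity reached: no more stories are pulled
        selected.append(story)
        committed += points
    return selected


backlog = [("Checkout", 8), ("Search filters", 13), ("Order history", 5),
           ("Wishlist", 8), ("Gift cards", 5)]
print(select_stories(backlog, capacity=30))
# ['Checkout', 'Search filters', 'Order history']
```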

Attendees of the iteration planning event include:

The Product Owner

The Scrum Master, who acts as the facilitator for this event

All team members

Any other stakeholders as required, including representatives from different Agile teams or the ART, and subject matter experts

An example agenda for iteration planning follows:

Calculate the available team capacity for the iteration.

Discuss each story, elaborate acceptance criteria, and provide estimates using story points.

Planning stops once the team runs out of capacity.

Determine and agree on the iteration goals.

Everyone commits to the goals.

Acceptance criteria are developed through conversation and collaboration with the Product Owner and other stakeholders. Based on the story estimates, the Product Owner may change the ranking of the stories.

Below are some tips for holding an iteration planning event:

Timebox the event to 4 hours or less

Iteration planning is organized by the team and is for the team

A team should avoid committing to work that exceeds its historical velocity

Reference: https://www.scaledagileframework.com/nonfunctional-requirements/

Nonfunctional Requirements (NFRs) define system attributes such as security,

reliability, performance, maintainability, scalability, and usability. They

serve as constraints or restrictions on the design of the system across the

different backlogs.

Reference: https://www.scaledagileframework.com/story/

Stories are short descriptions of a small piece of desired functionality, written in the user’s language. Agile Teams implement stories as small, vertical slices of system functionality, sized so they can be completed in a single Iteration.

Stories are the primary artifact used to define system behavior in Agile. They are short, simple descriptions of functionality usually told from the user’s perspective and written in their language. Each one is intended to enable the implementation of a small, vertical slice of system behavior that supports incremental development.

Stories provide just enough information for both business and technical people to understand the intent. Details are deferred until the story is ready to be implemented. Through acceptance criteria and acceptance tests, stories get more specific, helping to ensure system quality.

User stories deliver functionality directly to the end user. Enabler stories bring visibility to the work items needed to support exploration, architecture, infrastructure, and compliance.

Ron Jeffries, one of the inventors of XP, is credited with describing the 3Cs of a story:

Card – Captures the user story’s statement of intent using an index card, sticky note, or tool. Index cards provide a physical relationship between the team and the story. The card size physically limits story length and premature suggestions for the specificity of system behavior. Cards also help the team ‘feel’ upcoming scope, as there is something materially different about holding ten cards in one’s hand versus looking at ten lines on a spreadsheet.

Conversation – Represents a “promise for a conversation” about the story between the team, customer (or the customer’s proxy), the PO (who may be representing the customer), and other stakeholders. The discussion is necessary to determine more detailed behavior required to implement the intent. The conversation may spawn additional specificity in the form of acceptance criteria (the confirmation below) or attachments to the user story. The conversation spans all steps in the story life cycle:

Backlog refinement

Planning

Implementation

Demo

These just-in-time discussions create a shared understanding of the scope that formal documentation cannot provide. Specification by example replaces detailed documentation. Conversations also help uncover gaps in user scenarios and NFRs.

Confirmation – The acceptance criteria provide the information needed to ensure that the story is implemented correctly and covers the relevant functional requirements and NFRs. Figure 5 provides an example. Some teams use the confirmation section of the story card to write down what they will demo.

Agile teams automate acceptance tests wherever possible, often in business-readable, domain-specific language. Automation creates an executable specification to validate and verify the solution. Automation also provides the ability to quickly regression-test the system, enhancing Continuous Integration, refactoring, and maintenance.
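To illustrate an automated acceptance test written in business-readable terms, here is a Given/When/Then check for a hypothetical story: "As a shopper, I want a 10% discount on orders over $100." The story, the `apply_discount` function, and the threshold are invented for this example. Real teams often use a BDD tool such as Cucumber or behave, but this plain-Python sketch depends on neither.

```python
from decimal import Decimal


def apply_discount(order_total: Decimal) -> Decimal:
    """Hypothetical system under test: 10% off orders over $100."""
    if order_total > Decimal("100"):
        return (order_total * Decimal("0.90")).quantize(Decimal("0.01"))
    return order_total


def test_discount_applies_above_threshold():
    # Given a shopper with an order totaling $150.00
    total = Decimal("150.00")
    # When the discount rule is applied
    charged = apply_discount(total)
    # Then the shopper is charged $135.00
    assert charged == Decimal("135.00")


def test_no_discount_at_threshold():
    # Given an order totaling exactly $100.00
    # When the discount rule is applied
    # Then the full amount is charged
    assert apply_discount(Decimal("100.00")) == Decimal("100.00")


if __name__ == "__main__":
    test_discount_applies_above_threshold()
    test_no_discount_at_threshold()
    print("acceptance tests passed")
```

Because the tests are plain `test_` functions, a runner such as pytest can collect them and rerun them on every integration. That provides the quick regression check described above.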

Agile teams spend a significant amount of time discovering, elaborating, and understanding user stories and writing acceptance tests. This is as it should be, because it reflects the fact that writing the code for an understood objective is not necessarily the hardest part of software development; rather, the hard part is understanding what the real objective for the code is. Therefore, investing in good user stories, albeit at the last responsible moment, is a worthy effort for the team. Bill Wake coined the acronym INVEST [1] to describe the attributes of a good user story.

I – Independent (among other stories)

N – Negotiable (a flexible statement of intent, not a contract)

V – Valuable (providing a valuable vertical slice to the customer)

E – Estimable (small and negotiable)

S – Small (fits within an iteration)

T – Testable (understood enough to know how to test it)

Download User Story Primer: https://www.scaledagileframework.com/?ddownload=51361

Agile teams use story points and ‘estimating poker’ to value their work [1, 2]. A story point is a singular number that represents a combination of qualities:

Volume – How much is there?

Complexity – How hard is it?

Knowledge – What’s known?

Uncertainty – What’s unknown?

Story points are relative, without a connection to any specific unit of measure. The size (effort) of each story is estimated relative to the smallest story, which is assigned a size of ‘one.’ A modified Fibonacci sequence (1, 2, 3, 5, 8, 13, 20, 40, 100) is applied that reflects the inherent uncertainty in estimating, especially large numbers (e.g., 20, 40, 100) [2].
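To make relative sizing concrete, the sketch below expresses a story's effort as a multiple of the reference 'one' story and rounds it up to the next value in the modified Fibonacci sequence. Rounding up, rather than to the nearest card, is an assumption chosen to reflect estimating uncertainty. Reducing a story to a single effort ratio is also a simplification, because story points combine volume, complexity, knowledge, and uncertainty.

```python
import bisect
from typing import Optional

# Modified Fibonacci card values used for relative story sizing.
MODIFIED_FIBONACCI = [1, 2, 3, 5, 8, 13, 20, 40, 100]


def to_story_points(relative_size: float) -> Optional[int]:
    """Round a size (relative to the reference 'one') up to the next card.

    Returns None when the size exceeds 100, signaling a candidate for splitting.
    """
    index = bisect.bisect_left(MODIFIED_FIBONACCI, relative_size)
    if index == len(MODIFIED_FIBONACCI):
        return None
    return MODIFIED_FIBONACCI[index]


print(to_story_points(1))    # 1
print(to_story_points(4))    # 5
print(to_story_points(25))   # 40
print(to_story_points(150))  # None
```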

Agile teams often use ‘estimating poker,’ which combines expert opinion, analogy, and disaggregation to create quick but reliable estimates. Disaggregation refers to splitting a story or feature into smaller, easier-to-estimate pieces.

(Note that there are a number of other methods used as well.) The rules of estimating poker are:

Participants include all team members.

Each estimator is given a deck of cards with 1, 2, 3, 5, 8, 13, 20, 40, 100, ∞, and ?

The PO participates but does not estimate.

The Scrum Master participates but does not estimate unless they are doing actual development work.

For each backlog item to be estimated, the PO reads the description of the story.

Questions are asked and answered.

Each estimator privately selects an estimating card representing his or her estimate.

All cards are turned over at the same time to avoid bias and to make all estimates visible.

High and low estimators explain their estimates.

After a discussion, each estimator re-estimates by selecting a card.

The estimates will likely converge. If not, the process is repeated.
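The reveal, discuss, and re-estimate loop can be sketched as a helper that inspects one round of revealed cards. Treating 'converged' as every estimator showing the same card is an assumption, since some teams accept adjacent cards as close enough. The ∞ and ? cards are not modeled, and the names and votes are hypothetical.

```python
def review_round(votes: dict[str, int]) -> tuple[bool, str, str]:
    """Inspect one round of simultaneously revealed cards.

    Returns whether the estimates converged, plus the high and low
    estimators who should explain their reasoning if they did not.
    """
    converged = len(set(votes.values())) == 1
    high = max(votes, key=votes.get)
    low = min(votes, key=votes.get)
    return converged, high, low


round_one = {"Ana": 3, "Ben": 8, "Chen": 5, "Dee": 5}
print(review_round(round_one))     # (False, 'Ben', 'Ana')

round_two = {"Ana": 5, "Ben": 5, "Chen": 5, "Dee": 5}
print(review_round(round_two)[0])  # True
```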

Some amount of preliminary design discussion is appropriate. However, spending too much time on design discussions is often wasted effort. The real value of estimating poker is to come to an agreement on the scope of a story. It’s also fun!

The team’s velocity for an iteration is equal to the sum of the points for all the completed stories that met their Definition of Done (DoD). As the team works together over time, their average velocity (completed story points per iteration) becomes reliable and predictable. Predictable velocity assists with planning and helps limit Work in Process (WIP), as teams don’t take on more stories than their historical velocity would allow. This measure is also used to estimate how long it takes to deliver epics, features, capabilities, and enablers, which are also forecasted using story points.
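Velocity, as defined here, can be computed directly. Only stories that met the DoD count, and the average over recent iterations gives the planning figure. The story data and the three-iteration window are hypothetical.

```python
from statistics import mean


def iteration_velocity(stories: list[tuple[int, bool]]) -> int:
    """Sum points of stories that met the Definition of Done.

    Each story is a (points, met_definition_of_done) pair; partially
    finished stories contribute nothing.
    """
    return sum(points for points, done in stories if done)


history = [
    [(5, True), (8, True), (3, False), (13, True)],   # 26
    [(8, True), (8, True), (5, True), (2, True)],     # 23
    [(13, True), (5, True), (8, False), (3, True)],   # 21
]
velocities = [iteration_velocity(it) for it in history]
print(velocities)                  # [26, 23, 21]
print(round(mean(velocities), 1))  # 23.3
```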

Capacity is the portion of the team’s velocity that is actually available for any given iteration. Vacations, training, and other events can make team members unavailable to contribute to an iteration’s goals for some portion of the iteration. This decreases the maximum potential velocity for that team for that iteration. For example, a team that averages 40 points delivered per iteration would adjust their maximum velocity down to 36 if a team member is on vacation for one week. Knowing this in advance, the team only commits to a maximum of 36 story points during iteration planning. This also helps during PI Planning to forecast the actual available capacity for each iteration in the PI so the team doesn’t over-commit when building their PI Objectives.
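One way to reproduce the 36-point figure is to scale average velocity by the share of person-days still available, assuming a five-person team and a ten-workday (two-week) iteration. SAFe does not state this formula; it is an interpretation that happens to match the example. Here \bar{v} is the average velocity (40), N the team size (assumed 5), d the workdays per iteration (10), and d_off the total vacation days (5).

```latex
\text{capacity}
  = \bar{v} \cdot \frac{N d - d_{\text{off}}}{N d}
  = 40 \cdot \frac{5 \cdot 10 - 5}{5 \cdot 10}
  = 40 \cdot 0.9
  = 36
```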

In standard Scrum, each team’s story point estimating—and the resulting velocity—is a local and independent concern. At scale, it becomes difficult to predict the story point size for larger epics and features when team velocities can vary wildly. To overcome this, SAFe teams initially calibrate a starting story point baseline where one story point is defined roughly the same across all teams. There is no need to recalibrate team estimation or velocity. Calibration is performed one time when launching new Agile Release Trains.

Normalized story points provide a method for getting to an agreed starting baseline for stories and velocity as follows:

Give every developer-tester on the team eight points for a two-week iteration (one point for each ideal workday, subtracting 2 days for general overhead).

Subtract one point for every team member’s vacation day and holiday.

Find a small story that would take about a half-day to code and a half-day to test and validate. Call it a ‘one.’

Estimate every other story relative to that ‘one.’

Example: Assuming a six-person team composed of three developers, two testers, and one PO, with no vacations or holidays, the estimated initial velocity = 5 × 8 points = 40 points/iteration. (Note: Adjusting a bit lower may be necessary if one of the developers or testers is also the Scrum Master.)
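The normalization steps and the example translate into a small calculation. The sketch assumes every developer and tester counts as a 'developer-tester' and that the PO is excluded, which is how the example arrives at five contributors. The function name and parameters are illustrative.

```python
def normalized_velocity(developer_testers: int,
                        vacation_and_holiday_days: int = 0,
                        points_per_person: int = 8) -> int:
    """Starting velocity for a two-week iteration under normalized story points.

    Each developer-tester gets 8 points (10 workdays minus 2 days of overhead),
    and one point is subtracted per vacation or holiday day across the team.
    """
    return developer_testers * points_per_person - vacation_and_holiday_days


# Three developers and two testers; the PO does not contribute points.
print(normalized_velocity(developer_testers=3 + 2))         # 40
print(normalized_velocity(5, vacation_and_holiday_days=3))  # 37
```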

In this way, story points are somewhat comparable across teams. Management can better understand the cost for a story point and more accurately determine the cost of an upcoming feature or epic.

While teams tend to increase their velocity over time (and that’s a good thing), in reality the number tends to remain stable. A team’s velocity is far more affected by changing team size and technical context than by productivity variations.

Reference: https://www.scaledagileframework.com/system-demo/

The System Demo is a significant event that provides an integrated view of new Features for the most recent Iteration delivered by all the teams in the Agile Release Train (ART). Each demo gives ART stakeholders an objective measure of progress during a Program Increment (PI).

A system demo is a critical event. It’s the method for assessing the Solution’s current state and gathering immediate, Agile Release Train-level feedback from the people doing the work, as well as critical feedback from Business Owners, sponsors, stakeholders, and customers. The demo is the one real measure of value, velocity, and progress of the fully integrated work across all the teams.

Planning for and presenting a useful system demo requires some work and preparation by the teams. But it’s the only way to get the fast feedback needed to build the right solution.

The system demo tests and evaluates the full solution in a production-like context (often staging) to receive feedback from stakeholders. These stakeholders include Business Owners, executive sponsors, other Agile Teams, development management, customers (and their proxies) who provide input on the fitness for purpose for the solution under development. The feedback is critical, as only they can give the guidance the ART needs to stay on course or make adjustments.

The system demo occurs at the end of every Iteration.

The system demo takes place as close to the end of the iteration as possible—ideally, the next day. While that is the goal, there can be some complications that make that timing impractical. Immature continuous integration and Built-in Quality practices can delay the ART’s ability to integrate frequently. Also, each new increment may require extensions to the demo environment including new interfaces, third-party components, simulation tools, and other environmental assets. While the System Team strives to provide the proper demo environment at the end of each iteration, the integration may lag.

The system demo must occur within the time bounds of the following iteration. ARTs must make all the necessary investments to allow the system demo to happen in a timely cadence. In fact, a lagging system demo is often an indicator of larger problems within the ART, such as continuous integration maturity or System Team capacity.

Attendees typically include:

Product Managers and Product Owners, who are usually responsible for running the demo

One or more members of the System Team, who are often responsible for setting up the demo in the staging environment

Business Owners, executive sponsors, customers, and customer proxies

System Architect/Engineering, IT operations, and other development participants

ART Agile team members attend whenever possible

Having a set agenda and fixed timebox helps lower the transaction costs of the system demo. A sample agenda follows:

Briefly review the business context and the PI Objectives (approximately 5 – 10 minutes)

Briefly describe each new feature before demoing (about 5 minutes)

Demo each new feature in an end-to-end use case (around 20 – 30 minutes, total)

Identify current risks and impediments

Open discussion of questions and feedback

Wrap up by summarizing progress, feedback, and action items

Here are a few tips to keep in mind for a successful demo:

Timebox the demo to one hour. A short timebox is critical to keep the continuous, biweekly involvement of key stakeholders. It also illustrates team professionalism and system readiness.

Share demo responsibilities among the team leads, Product Owners, and even team members who have new features to demo

Demo using the staging environment

Minimize demo preparation. Demo the working, tested capabilities, not slideware.

Minimize demo presentation time. Demo screen snapshots and pictures where appropriate

Discuss the impact of the current solution on NFRs

Reference: https://www.scaledagileframework.com/iteration-retrospective/

The Iteration Retrospective is a regular event where Agile Team members discuss the results of the Iteration, review their practices, and identify ways to improve.

At the end of each iteration, Agile teams that apply ScrumXP (and many teams who use Kanban) gather for an iteration retrospective. Timeboxed to an hour or less, each retrospective seeks to uncover what’s working well, what isn’t, and what the team can do better next time.

Each retrospective yields both quantitative and qualitative insights. The quantitative review gathers and evaluates any metrics the team is using to measure its performance. The qualitative part discusses the team practices and the specific challenges that occurred during the last iteration or two. When issues have been identified, root cause analysis is performed, potential corrective actions are discussed, and improvement Stories are entered into the Team Backlog.

There are several popular techniques for eliciting subjective feedback on the success of the iteration (also see [1], [3], [4], [5]):

Individual – Individually write Post-Its and then find patterns as a group

Appreciation – Note whether someone has helped you or helped the team

Conceptual – Choose one word to describe the iteration

Rating – Rate the iteration on a scale of one to five, and then brainstorm how to make the next one a five

Simple – Open a discussion and record the results under three headings

The last is a conventional method, in which the Scrum Master simply puts up three sheets of flipchart paper labeled ‘What Went Well’, ‘What Didn’t’, and ‘Do Better Next Time’, and then facilitates an open brainstorming session. It can be conducted fairly easily, making all accomplishments and challenges visible...

Teams may choose to rotate the responsibility for facilitating retrospectives. If this is done, one of the fun practices is to allow each person to choose the retrospective format when it’s their turn to lead. This not only creates shared ownership of the process, but it also keeps the retrospective fresh. Team members are more likely to remain engaged when formats are new and varied.

Below are some tips for holding a successful iteration retro:

Keep the event timeboxed to an hour or less. Remember, it is repeated every iteration. The goal is to make small, continuous improvement steps.

Pick only one or two things that can be done better next time and add them as improvement stories to the team backlog, targeted for the next iteration. The other opportunities for improvement can always be addressed in future iterations if they continue to surface in retrospectives.

Make sure everyone speaks.

The Scrum Master should spend time preparing the retrospective, as it’s a primary vehicle for improvement.

Focus on items the team can address, not on how others can improve.

To show progress, make sure improvement stories from the previous iteration are discussed either at the Iteration Review or the beginning of the quantitative review.

The retrospective is a private event for the team and should be limited to Agile team members only.

© Scaled Agile, Inc.

Include this copyright notice with the copied content.